Streamline your data pipelines to support real-time decision-making. Apply best practices across your Big Data stack.


Prototype Development

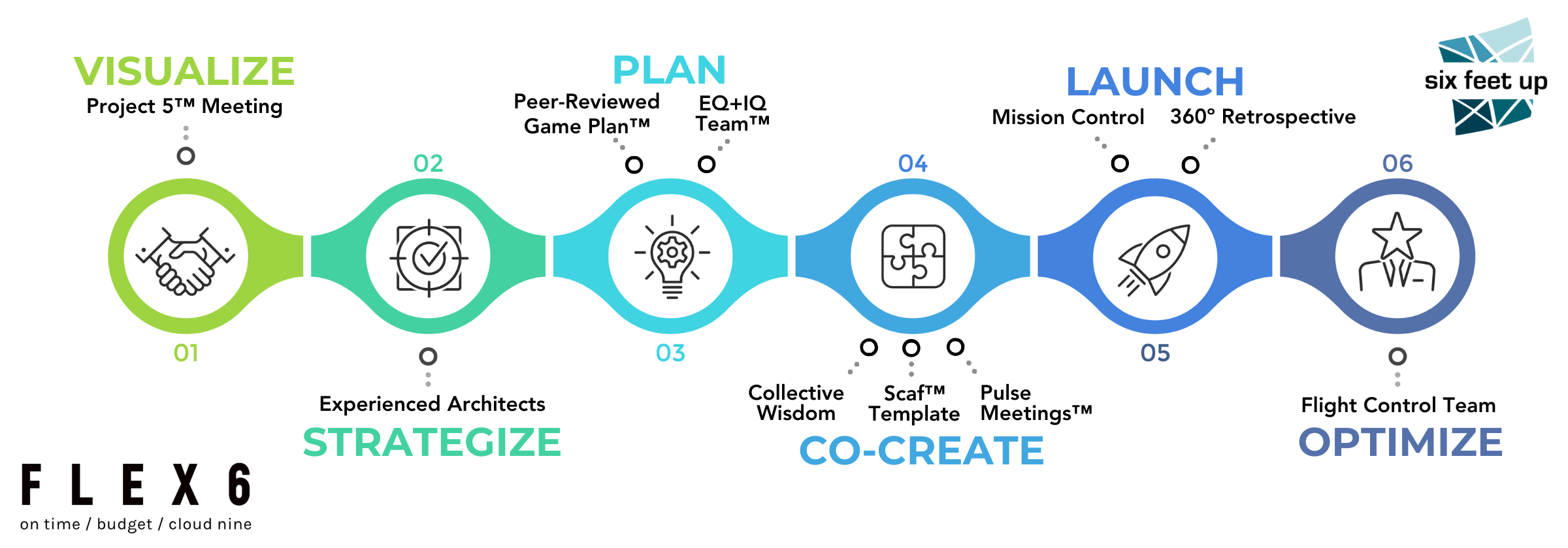

An iterative approach built on quick proofs of concept will help you validate your Big Data innovations faster than a waterfall approach.


Repeatable Deployments

Go from Jupyter notebooks to cloud-native containers. Automate the delivery of your pipeline using Continuous Integration/Continuous Deployment (CI/CD). Prevent drift in your architecture using Infrastructure as Code (IaC) tools like Terraform.


Observability

Improve your ability to troubleshoot issues and find performance bottlenecks by adding instrumentation to your ETL process. Roll up the data into dashboards for real-time decision-making.
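
As a rough illustration of what instrumenting an ETL process can look like, here is a minimal Python sketch. It assumes each step is a plain function that returns a list of rows. The names `instrumented`, `METRICS`, `extract`, `transform`, and `summarize` are hypothetical and chosen only for this example. A real pipeline would likely send these measurements to a metrics backend rather than keep them in memory.

```python
import time
from collections import defaultdict
from functools import wraps

# In-memory metric store (assumption for this sketch only).
METRICS = defaultdict(list)


def instrumented(step_name):
    """Record wall-clock duration and output row count for an ETL step."""
    def decorator(func):
        @wraps(func)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            result = func(*args, **kwargs)
            METRICS[step_name].append({
                "seconds": time.perf_counter() - start,
                "rows": len(result),
            })
            return result
        return wrapper
    return decorator


@instrumented("extract")
def extract():
    # Stand-in for reading from a source system.
    return [{"id": i, "value": i * 2} for i in range(1000)]


@instrumented("transform")
def transform(rows):
    # Stand-in for a filtering transformation.
    return [r for r in rows if r["value"] % 3 == 0]


def summarize(metrics):
    """Roll per-run samples up into one summary row per step."""
    return {
        step: {
            "runs": len(samples),
            "total_seconds": sum(s["seconds"] for s in samples),
            "total_rows": sum(s["rows"] for s in samples),
        }
        for step, samples in metrics.items()
    }


rows = transform(extract())
summary = summarize(METRICS)
```

The `summary` dictionary holds one entry per step, which is the kind of rolled-up data a dashboard could chart to show where time is spent and where row counts drop.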


Data Pipeline Optimization

End-to-End Quality

Professional methods and tools to ensure robustness, dependability, functional safety, cybersecurity and usability:

- Agile process

- CI/CD

- DevOps pipelines

- Secrets management

- Source control management

- Automated testing

- Documentation

- Instrumentation

- Monitoring

Technology Expertise

20+ years of software development and deployment experience with a focus on:

- Python / Django / Node.js

- AWS / GCP / Azure

- Databricks / Airflow

- React / Angular

- PostgreSQL

- scikit-learn

- Kubernetes / Terraform

- Linux / FreeBSD
